Four Decades of Intelligent Machines

“I do not think that the radio waves I have discovered will have any practical application.” (Heinrich Hertz)

It’s November of 1904. An engineer named John Ambrose Fleming is hunched over a contraption of glass and metal in his small, cluttered lab at University College London. The glow of the first practical vacuum tube fills the room as he places it into a circuit. This humble diode can only rectify a current, but it paves the way for the tubes that will soon amplify and switch electronic signals, and it provides the first glimmers of the coming age of electronic computers.

Fast forward to the early 1940s. The Second World War rages on. The Ballistic Research Laboratory in Maryland needs a faster way to calculate ballistic firing tables for the artillery. So the U.S. Army Ordnance Corps funds the construction of ENIAC, the world’s first general-purpose electronic computer. ENIAC hums into life in 1945. It looks more like a room than a tool: a vast, clattering array of metal and wire, vacuum tubes and punch cards, weighing in at a staggering 30 tons. And yet, it crunches numbers with an accuracy and speed unmatched by human hands, marking the dawn of a new era in technology.

The decades following the war usher in the era of mainframe computers, precursors to the corporate technology boom. It is now the early 1980s. Computers have begun to shrink and are finding their way out of university labs and corporate data centers, and into our homes. They have become desk-bound. Behold! The era of the internet and personal computers is upon us. Before long, the static of a dial-up connection becomes a familiar sound in homes across the globe, as the World Wide Web weaves its way into everyday life.

This trend continues for the next few decades as computers shrink further to become lap-bound, and eventually palm-bound. They permeate everything, from refrigerators that keep track of groceries, to watches that track steps and heartbeats, to cars that navigate themselves. The world is interconnected like never before. The Internet of Things, they call it. It’s a world where computational power is at everyone’s fingertips. It is now July 2023, about seven months since an AI chatbot named ChatGPT took the world by storm, setting off a domino effect of unprecedented growth in the capabilities of artificial intelligence.

The 40-Year Cycle

A recurring pattern can be observed if one examines the history of computing. It seems that roughly every 40 years, a major technological breakthrough drives growth and sets the direction for the next 40 years.

1900 - 1940

This period began with the invention of radio and vacuum tubes. The progress during the subsequent years laid the crucial groundwork for the development of electronic computers and set the stage for the technological revolution that was to follow.

1940 - 1980

The beginning of this period coincides with WWII, with the rest of it spanning the majority of the Cold War. For better or worse, wars have always been catalysts of change in human societies, and this era was no exception, resulting in the birth of computers, nuclear energy, space exploration, and the first commercially available antibiotics.

1980 - 2020

The beginning of this period was marked by the widespread adoption of personal computers like the Apple II (launched in 1977) and the IBM PC (introduced in 1981), which set off an explosion of information and technology in all areas of life. The advent of accessible computing and the internet drove significant changes and advancements during this time.

2020 - 2060?

Now it seems that we are in another forty-year cycle, one whose beginning is heralded by an artificial intelligence boom. This, in the words of Ray Kurzweil, is the Age of Intelligent Machines. At the dawn of this new age, we find ourselves asking: what do these next four decades really have in store for us? Honestly, I am as clueless as you are, but we can try to guess based on historical trends and where we presently stand.

I believe that in the coming decades (or perhaps in a matter of just years), we are going to witness several breakthroughs not just in artificial intelligence, but also in fusion power, genetic engineering, space exploration, neural interfaces, and entirely novel ways to build computers. Without a doubt, these breakthroughs will be at least partially driven by AI. We have only begun to scratch the surface and have yet to tap into AI’s immense potential in areas ranging from materials science to protein folding and more.

Accelerating Change

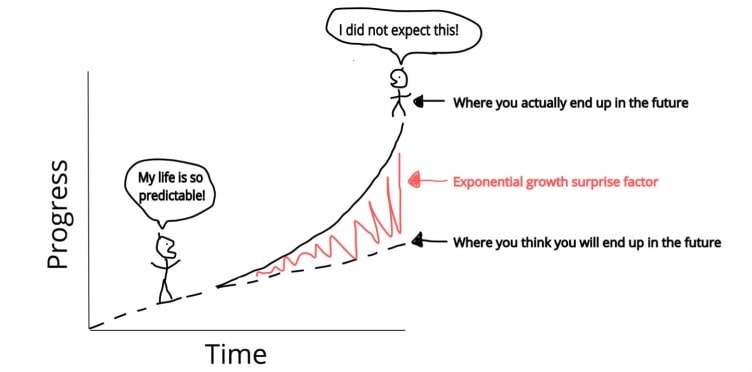

One important thing to keep in mind is that the 40-year cycle is not an empirical metric by any means. It’s just an observation that I used to weave a narrative and to get you to read my ramblings. In fact, the rate of technological advancement is not constant at all; it’s cumulatively exponential. With each technological discovery or innovation, the time to reach the next one becomes shorter, accelerating the pace of advancement. This is known as the Law of Accelerating Returns.

In other words, not only is technological progress accelerating; the rate at which it is accelerating is also accelerating. Let that one sink in.
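To get a feel for what shrinking gaps do to a timeline, here is a toy sketch in Python. It assumes each wait between breakthroughs is a fixed fraction of the previous one. The function name `breakthrough_times`, the 40-year starting gap, and the shrink factor of 0.5 are all made up for illustration; none of them is a measured value or part of Kurzweil’s formulation.

```python
def breakthrough_times(first_gap_years, shrink, count):
    """Return the cumulative years at which each breakthrough arrives.

    Toy assumption: every gap is `shrink` times the previous gap.
    """
    times = []
    elapsed = 0.0
    gap = first_gap_years
    for _ in range(count):
        elapsed += gap
        times.append(elapsed)
        gap *= shrink  # the next wait is a fixed fraction of this one
    return times


# Hypothetical numbers: start with a 40-year gap and halve it each time.
times = breakthrough_times(first_gap_years=40, shrink=0.5, count=5)

# Recover the individual gaps between consecutive breakthroughs.
gaps = [later - earlier for earlier, later in zip([0.0] + times, times)]

for year, gap in zip(times, gaps):
    print(f"breakthrough at year {year:5.1f} (waited {gap:4.1f} years)")
```

Under these made-up numbers the waits go 40, 20, 10, 5, and 2.5 years, so the later breakthroughs pile up quickly. Real history is far messier than a single shrink factor, but the sketch shows why a fixed 40-year cycle and accelerating change cannot both hold for long.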